Balancing Act: Delivering on Britain's Future Energy

We transformed a failing £630M critical infrastructure programme from distributed monolith chaos to reliable microservices delivery, enabling NESO's Open Balancing Platform to launch one week early and save UK consumers £15M annually.

PISR: Problem, Impact, Solution, Result

- Problem: The UK's National Energy System Operator was building the Open Balancing Platform (OBP) to replace a 1990s energy grid balancing system, but the £630M programme was experiencing significant challenges. Despite microservice architecture intentions, teams were deploying breaking changes that created a distributed monolith with cascading failures, 6+ hour test pipelines, and sprint-by-sprint code freezes that threatened critical OFGEM deadlines.

- Business Impact: The programme risked missing the December 2023 regulatory deadline, potentially facing significant OFGEM penalties (historically £1.5-£15M for system operator failures). With the platform needing to manage over 3,100 daily balancing instructions for Britain's electricity grid, failure would have prevented the £15M annual consumer savings and blocked the UK's net-zero transition infrastructure.

- Our Solution: Over 18 months, ClearRoute deployed two Quality Cloud Engineer pods - a distributed central team building capabilities for all 6 squads, plus embedded QCEs driving process change from within teams. We implemented a comprehensive test data factory for time-sensitive energy market data, transformed the anti-pattern test pyramid into a testing trophy approach, and embedded DevSecOps practices with 75% code coverage gates and comprehensive security scanning.

- Tangible Result: The transformation enabled OBP to launch one week early with 99.9% availability. Test automation runtime dropped 96% from 6+ hours to 5 minutes, deployment frequency increased 3x, and unit test coverage reached 75% across all microservices. The platform now processes 2,527 MWh of daily battery dispatch (up from 659 MWh), directly enabling the UK's renewable energy transition.

The Challenge

Business & Client Context

- Primary Business Goal: Deliver the Open Balancing Platform to replace Britain's 1990s electricity balancing system, enabling net-zero carbon operability by managing thousands of small renewable energy units instead of centralised fossil fuel generators.

- Pressures: A critical OFGEM regulatory deadline of December 2023, potential multi-million-pound penalties for delays, and the urgent need to support Britain's renewable energy transition as wind generation has grown from 1 GW to more than 20 GW since 2010.

- Technology Maturity: While designed as microservices, the platform had devolved into a distributed monolith due to poor service boundaries, lack of contract enforcement, and teams deploying breaking changes without cross-team awareness.

Energy Trading Context: The Balancing Mechanism

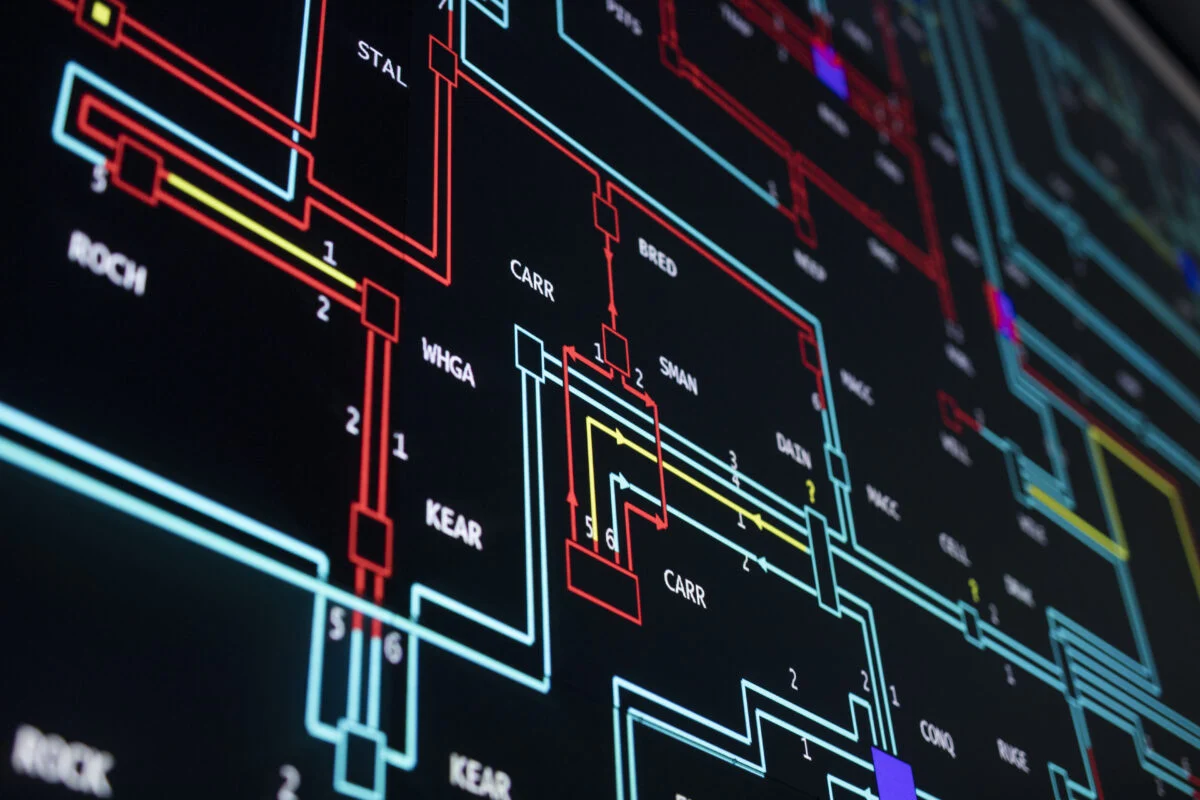

The Open Balancing Platform manages Britain's electricity balancing mechanism - a critical system ensuring supply matches demand every second. Understanding this context is essential to appreciating the testing complexity.

The Balancing Mechanism (BM) is NESO's primary tool to balance supply and demand on GB's network. Each trading period is 30 minutes long, with the auction gate opening 60 to 90 minutes before real time. Market participants submit "bids" or "offers" into the BM, and NESO issues Bid Offer Acceptances (BOAs) as instructions to change output accordingly.

In 2020, the ENCC issued 1,800 daily balancing instructions to market participants. This has since risen to 3,100 daily balancing instructions, an increase of roughly 72% (to about 172% of the 2020 level).

Key Business Functions:

- Real-time Grid Balancing: Electricity cannot be stored at scale and must be generated at the time of demand, requiring second-by-second balancing of supply and demand.

- Market Optimisation: Using merit order to select the cheapest electricity options first, saving consumers money whilst maintaining grid stability.

- Renewable Integration: Managing thousands of small renewable generators (wind, solar, batteries) instead of traditional large fossil fuel plants.

- Regulatory Compliance: Meeting OFGEM requirements for system reliability, security, and cost-efficiency.
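To make the merit-order idea concrete, here is a minimal sketch of "cheapest first" selection. It is an illustration only, not OBP's dispatch logic: the `Offer` record, the unit names, and the prices are hypothetical, and a real balancing decision also has to respect network constraints and unit dynamics that this sketch ignores.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class MeritOrderSketch {
    /** Hypothetical offer: a unit can supply up to volumeMw at pricePerMwh. */
    record Offer(String unitId, double volumeMw, double pricePerMwh) {}

    /**
     * Accepts the cheapest offers first until requiredMw is covered.
     * The last accepted offer may be taken only partially.
     */
    static List<Offer> select(List<Offer> offers, double requiredMw) {
        List<Offer> sorted = new ArrayList<>(offers);
        sorted.sort(Comparator.comparingDouble(Offer::pricePerMwh));

        List<Offer> accepted = new ArrayList<>();
        double remaining = requiredMw;
        for (Offer offer : sorted) {
            if (remaining <= 0) {
                break;
            }
            double take = Math.min(offer.volumeMw(), remaining);
            accepted.add(new Offer(offer.unitId(), take, offer.pricePerMwh()));
            remaining -= take;
        }
        return accepted;
    }

    public static void main(String[] args) {
        List<Offer> offers = List.of(
            new Offer("GAS-1", 100, 120.0),
            new Offer("BATT-1", 40, 65.0),
            new Offer("WIND-1", 30, 50.0));
        // Covers an 80 MW shortfall using the cheapest units first.
        select(offers, 80).forEach(System.out::println);
    }
}
```

With the sample data, the wind and battery offers are accepted in full and the gas unit is only asked for the remaining 10 MW, which is the consumer-cost effect the merit order is meant to achieve.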

Understanding the Energy Trading Domain

To appreciate the testing complexity we faced, it's crucial to understand how Britain's electricity balancing mechanism works.

The Open Balancing Platform manages the Balancing Mechanism (BM)—a critical system ensuring electricity supply matches demand every second. Electricity cannot be stored at scale, so it must be generated precisely when needed.

Current State Assessment: Route to Live Findings

Our comprehensive Route to Live assessment revealed systemic issues across the delivery pipeline:

Pipeline Reliability Issues:

- Test Pipeline Success Rate: Only 12.6% (14/111 runs) in the System Testing environment

- Feature Test Pass Rate: Just 14% in system testing, with build verification tests failing since January 11th

- Test Execution Time: 6 hours for automated tests, with many requiring overnight execution

- Silent Failures: Platform team aware that security steps were failing silently, but pipeline steps still passed

Organisational Anti-Patterns:

- Definition of Done Misalignment: Programme stated "deploying working software to the System Integration Testing environment" but this wasn't being achieved due to inconsistent goals across squads

- Long Feedback Loops: Engineers deploying to the shared development environment waited far longer than a sprint (a 92-day lead time) for feedback from the SIT environment

- Massive Scrum Inefficiency: Daily scrum calls with 50+ engineers provided no productive value

- Silos Between Teams: QA&I team separation from engineering squads created handoffs and reduced change frequency

Cultural & Vendor Management Challenges:

- Entrenched Testing Partner Resistance: The existing testing automation vendor was heavily invested in their current Universal Automation Framework (UAF) approach, despite evidence of systemic failures. They resisted fundamental changes to testing strategy, viewing quality engineering transformation as a threat to their established processes and commercial position.

- Lack of Transparency: Test failures were often obscured or rationalised, making it difficult for NESO stakeholders to understand the true state of system quality and the urgent need for change.

- Competing Methodologies: The vendor's Java 8-based framework, which focused on Jira ID descriptions rather than behaviour validation, was fundamentally incompatible with modern microservice testing approaches, creating an organisational conflict between legacy and modern practices.

Technical Architecture Flaws:

- BM Integration Coverage Gap: UAF Test Framework bypassed critical Adaptors layer, creating significant gaps in integration testing of XML-to-JSON conversion

- Test Data Factory Issues: Manual, time-consuming process with tooling cost overhead for creating realistic energy market data

- No Unit/Integration Tests: Many microservices relied entirely on time-consuming "user story tests"

- Legacy Framework: Universal Automation Framework using Java 8 (9 years old) with tests describing Jira IDs instead of application behaviour

Quality Engineering Gaps:

- No Code Coverage Reporting: Lack of visibility into test coverage across services

- No Quality Metrics: No way to determine system quality, defects, or bugs

- Manual Release Processes: Creating test cases, updating release logs, and orchestrating releases were all manual activities requiring time and effort from multiple stakeholders

- Inconsistent Pull Request Reviews: High volume of PRs merged without approval across repositories

Baseline Metrics from Route to Live Assessment

| Metric Category | Baseline | Notes |
|---|---|---|
| Test Pipeline Success Rate | 12.6% (14/111) | ST environment pipeline failures |
| Feature Test Pass Rate | 14% | Critical functionality largely untested |
| Test Execution Time | 6 hours | Some requiring overnight execution |
| Lead Time for Changes | 92 days | From dev-int to SIT environment |
| Deployment Frequency (ST) | Every 21.9 hours | 11 deployments per two-week period |
| Deployment Frequency (SIT) | Once per sprint | Manual verification required |
| Code Coverage | Not measured | No reporting or enforcement |
| Pull Requests Without Approval | High volume | Across multiple repositories |

Solution Overview

Engagement Strategy & Phases

- Phase 1: Route to Live Assessment & Discovery: Conducted comprehensive RTL mapping that revealed critical findings: 12.6% pipeline success rate, 14% feature test pass rate, 92-day lead times, and systemic organisational issues including 50+ person daily scrums and Definition of Done misalignment across squads.

- Phase 2: Test Strategy Foundation: Deployed distributed central QCE pod to design test data factory and microservice testing approach. Embedded QCEs into 2 pilot squads to prove transformation patterns.

- Phase 3: Organisation-Wide Rollout: Scaled embedded QCE approach across all 6 engineering squads. Implemented DevSecOps practices, service contracts, and testing trophy paradigm with SonarQube enforcement.

- Phase 4: Continuous Testing Platform: Built comprehensive platform for health evaluation, performance testing, and chaos engineering to support zero-downtime operations and 99.9% availability SLO.

Change Management & Vendor Collaboration Strategy: Rather than directly confronting the existing testing partner, we implemented a "transparency-first" approach that let the data speak for itself:

- Observable Test Results Dashboard: We made all test executions highly visible through comprehensive reporting dashboards that clearly showed failure rates, execution times, and root cause analysis in real-time.

- Comparative Metrics Visualisation: Created side-by-side comparisons of the existing UAF framework's performance versus our testing trophy approach, allowing stakeholders to see the dramatic differences in reliability and speed.

- Collaborative Pilot Approach: Worked alongside the existing partner on pilot squads, demonstrating new approaches without directly threatening their position, while building internal capability and confidence.

- Data-Driven Decision Making: Enabled NESO leadership to make informed decisions about testing strategy based on clear evidence rather than vendor assertions or internal politics.

Test Data Factory: Solving Energy Market Complexity

The energy trading domain presented unique testing challenges that traditional approaches couldn't handle:

Energy Market Time Sensitivity: The balancing mechanism relies on real-time data flow between NESO and market participants.

- Each trading period lasts exactly 30 minutes

- Auction gates open 60-90 minutes before real time

- Market participants submit "bids" (reduce output) or "offers" (increase output)

- NESO issues Bid Offer Acceptances (BOAs) as instructions to generators
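The 30-minute cadence is what makes test data go stale so quickly. As a rough illustration, the sketch below maps a timestamp to its half-hour period and that period's start time. It assumes the usual GB convention of numbering periods from local midnight in the `Europe/London` zone; the class and method names are hypothetical, and the source does not describe how OBP itself indexes periods.

```java
import java.time.Duration;
import java.time.ZoneId;
import java.time.ZonedDateTime;

final class SettlementPeriodSketch {
    // Assumption: periods are counted from local midnight in Great Britain.
    static final ZoneId GB = ZoneId.of("Europe/London");
    static final int PERIOD_MINUTES = 30;

    /** 1-based index of the 30-minute period that contains the given time. */
    static int periodNumber(ZonedDateTime time) {
        ZonedDateTime local = time.withZoneSameInstant(GB);
        ZonedDateTime midnight = local.toLocalDate().atStartOfDay(GB);
        // Elapsed time (not wall-clock time) keeps clock-change days consistent.
        long elapsedMinutes = Duration.between(midnight, local).toMinutes();
        return (int) (elapsedMinutes / PERIOD_MINUTES) + 1;
    }

    /** Start of the 30-minute period that contains the given time. */
    static ZonedDateTime periodStart(ZonedDateTime time) {
        ZonedDateTime local = time.withZoneSameInstant(GB);
        ZonedDateTime midnight = local.toLocalDate().atStartOfDay(GB);
        long offsetMinutes = (long) (periodNumber(time) - 1) * PERIOD_MINUTES;
        return midnight.plus(Duration.ofMinutes(offsetMinutes));
    }

    public static void main(String[] args) {
        ZonedDateTime now = ZonedDateTime.now(GB);
        System.out.println("Period " + periodNumber(now) + " started at " + periodStart(now));
    }
}
```

Any fixture that hard-codes a period number or start time is only valid for half an hour, which is why static test data kept failing for reasons unrelated to application bugs.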

BM Integration Architecture Challenge:

The Route to Live assessment revealed a critical gap in the integration testing approach. The existing UAF Test Framework was bypassing the crucial Adaptors layer that converts XML from the Balancing Mechanism to JSON for microservices.

This created a significant coverage gap for testing the critical XML-to-JSON conversion logic that processes real-time energy trading data. Our solution included testing through the actual Adaptors to ensure complete integration coverage.
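To show what "testing through the Adaptors" means in practice, here is a minimal sketch of an XML-to-JSON conversion that a test can exercise end to end. Everything specific in it is an assumption: the `<acceptance>` message shape, the field names, and the flat string-only JSON output are invented for illustration, and the real Adaptors layer is certainly richer than this.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

final class AdaptorSketch {
    /** Reads the direct child elements of the root into an ordered name/value map. */
    static Map<String, String> parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Reject DOCTYPE declarations so untrusted XML cannot pull in external entities.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Document doc = factory.newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));

            Map<String, String> fields = new LinkedHashMap<>();
            NodeList children = doc.getDocumentElement().getChildNodes();
            for (int i = 0; i < children.getLength(); i++) {
                Node child = children.item(i);
                if (child.getNodeType() == Node.ELEMENT_NODE) {
                    fields.put(child.getNodeName(), child.getTextContent().trim());
                }
            }
            return fields;
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse XML message", e);
        }
    }

    /** Renders the map as a flat JSON object; every value is emitted as a string. */
    static String toJson(Map<String, String> fields) {
        return fields.entrySet().stream()
            .map(e -> quote(e.getKey()) + ":" + quote(e.getValue()))
            .collect(Collectors.joining(",", "{", "}"));
    }

    private static String quote(String raw) {
        // Minimal escaping (backslash and double quote only), enough for this sketch.
        return "\"" + raw.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    public static void main(String[] args) {
        String xml = "<acceptance><unitId>UNIT-1</unitId><levelMw>50</levelMw></acceptance>";
        System.out.println(toJson(parse(xml)));
    }
}
```

A test that feeds XML in and asserts on the JSON out covers the conversion logic; a test that injects JSON directly downstream of the adaptor, as the UAF framework effectively did, never touches it.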

Our Solution - Intelligent Test Data Library & Deployment Testing:

We created a new internal tool, "Data Loader", that orchestrated test data setup and integrated cleanly with our deployment test framework.

- Java-based Factory: Built a comprehensive library that teams could declare test data requirements against, supporting both production data available from the public Elexon API and synthetic generation

- Temporal Transformation: Automatically transformed historical energy data to be valid for current test execution windows using realistic time-series patterns

- Market Context Awareness: Generated realistic bids, offers, and grid state data matching actual trading patterns including BOD (Bid Offer Data), rate data, and physical notifications

- Dependency Management: Handled complex relationships between users, accounts, trading positions, and market conditions

- Deployment Test Framework: Built deterministic, data-agnostic tests using Playwright for UI validation and comprehensive API testing with Cucumber for behaviour-driven development

Example Data Factory Usage:

    // From Elexon Production Data
    List<DeclarationMetaData> declarations = List.of(DeclarationMetaData.builder()
        .messageType("declaration")
        .bmUnitToQuery(unitIdToEmulate)
        .unitId(unitIdToCreate)
        .elexonApiDeclarationStartTime(windowStartTime)
        .elexonApiDeclarationEndTime(windowEndTime)
        .build());

    // Or Generate Synthetic Test Data
    TestData testData = TestDataFactory.builder()
        .withTradingPeriod(currentSettlementPeriod())
        .withBatteryUnits(50, "charged")
        .withWindGeneration("high")
        .withDemandProfile("winter_peak")
        .build();

Deployment Test Integration: The framework enabled teams to create comprehensive end-to-end tests that validated complete energy trading scenarios from market data through to grid instructions, with automatic cleanup and deterministic execution across all environments.
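The temporal transformation mentioned above can be pictured as a whole-period time shift. The sketch below is a simplified stand-in, not the Data Loader's actual implementation: it moves a historical series so that its earliest half-hour lines up with the half-hour containing the test window start, preserving the spacing and values. The `Reading` record and method names are hypothetical, and the real library also shaped data with realistic time-series patterns, which this sketch does not attempt.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

final class TemporalShiftSketch {
    static final Duration PERIOD = Duration.ofMinutes(30);

    /** Hypothetical historical data point: output in MW for one half-hour period. */
    record Reading(Instant periodStart, double mw) {}

    /** Rounds an instant down to the start of its half-hour period. */
    static Instant floorToPeriod(Instant time) {
        long periodSeconds = PERIOD.getSeconds();
        return Instant.ofEpochSecond(Math.floorDiv(time.getEpochSecond(), periodSeconds) * periodSeconds);
    }

    /**
     * Shifts every reading by the same whole number of periods so that the
     * earliest reading lands on the period containing windowStart.
     */
    static List<Reading> shiftTo(List<Reading> historical, Instant windowStart) {
        if (historical.isEmpty()) {
            return List.of();
        }
        Instant earliest = historical.stream()
            .map(Reading::periodStart)
            .min(Comparator.naturalOrder())
            .orElseThrow();
        Duration offset = Duration.between(floorToPeriod(earliest), floorToPeriod(windowStart));
        return historical.stream()
            .map(r -> new Reading(r.periodStart().plus(offset), r.mw()))
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Reading> historical = List.of(
            new Reading(Instant.parse("2020-01-01T10:00:00Z"), 12.5),
            new Reading(Instant.parse("2020-01-01T10:30:00Z"), 14.0));
        shiftTo(historical, Instant.now()).forEach(System.out::println);
    }
}
```

Because the offset is always a whole number of 30-minute periods, shifted data stays aligned to period boundaries, so the same historical scenario can be replayed in whatever window a test happens to run.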

Testing Strategy Transformation: From Pyramid to Trophy

Before: Anti-Pattern Test Pyramid (Route to Live Findings)

- Heavy reliance on end-to-end tests with 14% pass rate in ST environment

- UAF automation tests described Jira work items instead of application behaviour

- No unit or integration tests in many microservices, relying on time-consuming user story tests

- Tests failed due to external dependencies and time-sensitive data, not application bugs

- Sequential execution due to shared test data dependencies requiring overnight runs

After: Testing Trophy for Microservices

- 75% Unit Test Coverage: Enforced via SonarQube quality gates that could fail builds and prevent deployments

- Robust Integration Testing: Pre-merge validation of service contracts and API compatibility including BM Adaptors layer

- Targeted E2E Tests: Focused on critical user journeys with isolated test data and realistic BM data replay

- Parallel Execution: Independent test data per service enabling concurrent validation
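The effect of the 75% gate can be sketched in a few lines. This is not SonarQube's API or configuration, only the decision it enforces expressed as plain Java; the service names and coverage figures are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class CoverageGateSketch {
    // Threshold taken from the engagement's 75% unit test coverage target.
    static final double MIN_COVERAGE_PERCENT = 75.0;

    /** Returns the services below the threshold, in alphabetical order. */
    static List<String> failingServices(Map<String, Double> coverageByService) {
        List<String> failing = new ArrayList<>();
        new TreeMap<>(coverageByService).forEach((service, coverage) -> {
            if (coverage < MIN_COVERAGE_PERCENT) {
                failing.add(service);
            }
        });
        return failing;
    }

    public static void main(String[] args) {
        Map<String, Double> coverage = Map.of("bids", 81.2, "adaptors", 74.9, "dispatch", 75.0);
        List<String> failing = failingServices(coverage);
        System.out.println(failing.isEmpty() ? "Gate passed" : "Gate failed for: " + failing);
    }
}
```

The important design point is that the gate blocks the build rather than merely reporting, so a service cannot drift below the threshold unnoticed.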

Service Contract Implementation

Contract Testing Approach:

- HTTP request/response validation in CI pipelines

- Automated contract compatibility checks before deployment

- Breaking change detection with immediate feedback to development teams
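As an illustration of breaking-change detection, the sketch below compares the fields a consumer relies on with the fields a provider currently returns. The source does not name the contract-testing tool used, so this is a hand-rolled stand-in with hypothetical field names and a deliberately simple notion of "type"; dedicated contract-testing tools cover far more (status codes, headers, nested payloads).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class ContractCheckSketch {
    /**
     * Compares field name -> type maps. A change is breaking when a field the
     * consumer expects is missing or has a different type. Extra provider
     * fields are treated as compatible.
     */
    static List<String> breakingChanges(Map<String, String> consumerExpects,
                                        Map<String, String> providerOffers) {
        List<String> problems = new ArrayList<>();
        // TreeMap gives a stable, alphabetical report order.
        for (Map.Entry<String, String> expected : new TreeMap<>(consumerExpects).entrySet()) {
            String field = expected.getKey();
            String actualType = providerOffers.get(field);
            if (actualType == null) {
                problems.add("missing field: " + field);
            } else if (!actualType.equals(expected.getValue())) {
                problems.add("type changed: " + field
                    + " (" + expected.getValue() + " -> " + actualType + ")");
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        Map<String, String> consumer = Map.of("unitId", "string", "levelMw", "number");
        Map<String, String> provider = Map.of("unitId", "string", "levelMw", "string", "note", "string");
        breakingChanges(consumer, provider).forEach(System.out::println);
    }
}
```

Running a check like this in the provider's pipeline before merge is what turns a cross-team breaking change from a late SIT failure into immediate feedback for the team that introduced it.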

QCE Disciplines Applied

- Quality Engineering: Transformed testing paradigm from manual, error-prone processes to automated, reliable pipelines. The testing trophy approach with intelligent test data factory enabled deterministic outcomes for critical energy trading functions whilst maintaining realistic integration coverage across NESO's complex microservice environment.

- Platform Engineering: Delivered robust testing infrastructure using Red Hat OpenShift and Azure that standardised development experience across cloud and on-premises environments. The test data factory and continuous testing platform became reusable assets serving all 6 engineering squads with consistent, scalable capabilities.

- Developer Experience: Eliminated sprint code freezes and reduced feedback cycles from 6+ hours to 5 minutes through intelligent test automation and contract validation. Embedded QCEs provided direct skill transfer on acceptance criteria, test placement, and tool adoption, enabling teams to own quality throughout the development lifecycle.

Mindset Transformation and Team Enablement

In addition to implementing technical solutions, ClearRoute played a pivotal role in transforming the mindset and fostering a culture of collaboration and quality ownership across all squads.

Shifting to the Left

We introduced a test-first, shift-left mindset to the squads, coaching developers and requiring them to write high-quality unit and component tests. This shift empowered developers to take ownership of quality early in the development lifecycle, reducing reliance on end-to-end tests and improving overall test coverage.

Coaching Quality Engineers

Quality Assurance (QA) engineers were coached to evaluate and distribute tests effectively across the testing trophy. Previously, QAs focused solely on writing end-to-end tests, leading to inefficiencies and gaps in coverage. Through our mentorship, QAs began collaborating with developers to ensure proper test distribution, balancing unit, component, integration and end-to-end tests. This alignment significantly improved the efficiency and reliability of the testing process.

Bridging Communication Gaps with 3-Amigo Sessions

A major challenge was the lack of collaboration during requirements gathering, which often resulted in incomplete or misunderstood requirements. To address this, we introduced 3-Amigo Sessions, bringing together developers, QAs, BAs and product owners to collaboratively discuss and refine requirements. These sessions ensured that:

- Requirements were comprehensive and accounted for all perspectives.

- Product owners, developers and testers had a shared understanding of the requirements.

- Test scenarios were clearly defined and agreed on.

Test Distribution Discussions

We facilitated Test Distribution Discussions to align on test coverage responsibilities. Teams collaboratively discussed and agreed on test scenarios, determining which scenarios would be covered by unit tests, component tests, integration or end-to-end tests. These discussions helped the team to achieve high test coverage while avoiding unnecessarily duplicated testing efforts. Expectations were documented in Gherkin format, providing clarity and traceability for all team members.

Results of Mindset Transformation

These coaching efforts led to a unified and efficient team dynamic:

- Requirements became more robust and aligned with business needs.

- Developers and testers worked collaboratively, reducing misunderstandings and missed scenarios.

- Testing shifted left, eliminating bottlenecks and enabling higher team velocity.

- The squads achieved a shared sense of ownership over quality, fostering a culture of continuous improvement.

The Results: Measurable & Stakeholder-Centric Impact

Headline Success Metrics

| Metric | Before Engagement | After Engagement | Improvement |
|---|---|---|---|
| Test Pipeline Runtime | 6+ hours | 5 minutes | 96% Reduction |
| Deployment Frequency | Variable | 3x Increase | 200% Improvement |
| Unit Test Coverage | Inconsistent | 75% All Services | Comprehensive |
| Code Freeze Frequency | Every Sprint | Eliminated | 100% Reduction |
| Project Delivery | At Risk | 1 Week Early | Ahead of Schedule |
| System Availability | Unknown | 99.9% | Production Ready |

Business Impact: Real Consumer Savings

Consumers are expected to save £15 million per year in energy spending thanks to the enhanced system. When comparing the 3 months before OBP went live in December 2023 to the last 3 months of 2024, the average dispatch volume of batteries increased from 659 to 2,527 MWh per day. Daily instructions have increased from 217 to 1,867.

Value Delivered by Stakeholder

- For the CTO / Chief Executive:
  - Avoided potentially significant OFGEM penalties by delivering critical infrastructure one week early. (risk_mitigation: "Regulatory compliance achieved")
  - Enabled £15M annual consumer savings through optimised energy balancing and grid efficiency. (business_value: "Direct consumer benefit")
  - Positioned NESO as a leader in energy system transformation, supporting UK net-zero targets. (strategic_advantage: "National infrastructure leadership")

- For the VP/Director of Engineering:
  - Solved multi-year delivery challenges affecting 300+ engineers across 6 squads and 2 major service providers. (capability_building: "Organisation-wide transformation")
  - Eliminated sprint code freezes and manual regression bottlenecks, dramatically improving engineering velocity. (team_productivity: "Continuous delivery enabled")
  - Established reusable patterns and tooling that can be applied to future energy platform projects. (technical_assets: "Scalable architecture patterns")
  - Resolved vendor management challenges through transparent, data-driven approaches that eliminated organisational conflict while preserving professional relationships. (stakeholder_management: "Evidence-based transformation")
  - Provided clear visibility into testing effectiveness, enabling informed decisions about tooling and partnerships. (transparency_improvement: "Real-time quality visibility")

- For the Platform Engineering / DevOps Manager:
  - Delivered comprehensive testing infrastructure that reduced pipeline time by 96% whilst improving reliability. (infrastructure_efficiency: "Automated testing platform")
  - Implemented DevSecOps practices ensuring security and compliance are embedded in the delivery process. (security_improvement: "Built-in compliance")
  - Created test data factory and continuous testing capabilities serving all microservices. (platform_asset: "Reusable testing infrastructure")

Recognition

The OBP team was recognised at the 2024 Computing.co.uk DevOps Excellence Awards, winning the award for Best DevOps Team of the Year.

Source: IBM Case Study on NESO

Lessons, Patterns & Future State

- What Worked Well: The distributed central pod model combined with embedded QCEs provided both organisation-wide capability building and deep team transformation. The testing trophy approach proved essential for microservice architectures, moving quality validation closer to development whilst maintaining integration confidence.

- Challenges Overcome: The "impossible" time-sensitive test data problem was solved through intelligent transformation rather than static data approaches. Sprint code freezes were eliminated by shifting validation pre-merge, fundamentally changing the risk profile from late-stage manual discovery to early automated prevention.

- Cultural Change Management: When dealing with entrenched vendors or internal resistance, transparency and observable results are more effective than confrontation. Making test failures highly visible allowed stakeholders to reach their own conclusions about the need for change, avoiding the politics of directly challenging existing partnerships.

- Key Takeaway for Similar Engagements: For any critical infrastructure platform, a comprehensive test data factory that understands domain constraints is not optional - it's a prerequisite for reliable automation. The testing trophy paradigm is specifically suited to microservice architectures where service boundaries matter more than end-to-end coverage.

- Client's Future State / Next Steps: With the Open Balancing Platform successfully deployed and operating at 99.9% availability, NESO is positioned to continue expanding automated energy balancing capabilities. The testing infrastructure and quality engineering practices established provide a foundation for adding new renewable energy sources, storage technologies, and market mechanisms whilst maintaining system reliability and regulatory compliance. The platform's success in managing 2,527 MWh of daily battery dispatch demonstrates readiness for the UK's accelerating renewable energy transition.