Use Consul as a Load Balancer with Nginx


In this post, we will look at what Consul can do for Service Discovery and Health Checking, and how we can use its Key/Value store. We will create two Nginx servers serving two different pages. These pages will display keys from Consul's K/V store, rendered with Consul Template. We will then dynamically load balance these two servers with a third Nginx server, which will only send traffic to the servers that are online. Everything relies heavily on Docker, and Registrator will be used to automatically register and deregister our services.

The theory

Consul

Consul has multiple components, but as a whole, it is a tool for discovering and configuring services in your infrastructure. It provides several key features:

* Service Discovery: Clients of Consul can provide a service, such as api or mysql, and other clients can use Consul to discover providers of a given service. Using either DNS or HTTP, applications can easily find the services they depend upon.

* Health Checking: Consul clients can provide any number of health checks, either associated with a given service (“is the webserver returning 200 OK”), or with the local node (“is memory utilization below 90%”). This information can be used by an operator to monitor cluster health, and it is used by the service discovery components to route traffic away from unhealthy hosts.

* KV Store: Applications can make use of Consul’s hierarchical key/value store for any number of purposes, including dynamic configuration, feature flagging, coordination, leader election, and more. The simple HTTP API makes it easy to use.

* Multi Datacenter: Consul supports multiple datacenters out of the box. This means users of Consul do not have to worry about building additional layers of abstraction to grow to multiple regions.

Consul is designed to be friendly to both the DevOps community and application developers, making it perfect for modern, elastic infrastructures.

The list above is taken directly from the official documentation, which is worth reading. In this post we will use Service Discovery, Health Checking and the KV Store.
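All three features are reachable over Consul's HTTP API on port 8500. The sketch below wraps the relevant endpoints in small shell functions so you can poke at them once the setup below is running. CONSUL_URL and the function names are our own hypothetical choices, and the default address assumes the single-server setup built later in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around Consul's HTTP API.
# CONSUL_URL is our own variable; the default matches the agent started below.
CONSUL_URL="${CONSUL_URL:-http://localhost:8500}"

# Service discovery: every registered instance of a service, healthy or not.
catalog_service() { curl -s "${CONSUL_URL}/v1/catalog/service/$1"; }

# Health checking: only the instances whose health checks are all passing.
healthy_service() { curl -s "${CONSUL_URL}/v1/health/service/$1?passing"; }

# KV store: the raw value of a key (without ?raw, Consul returns JSON
# with the value base64-encoded).
kv_get() { curl -s "${CONSUL_URL}/v1/kv/$1?raw"; }
```

For example, once the web servers below are running, healthy_service webserver returns the JSON for the healthy instances only. Consul also answers DNS queries on port 8600, so dig @127.0.0.1 -p 8600 webserver.service.consul SRV lists the same instances along with their ports.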

Nginx

NGINX is a free, open-source, high-performance HTTP server and reverse proxy, as well as an IMAP/POP3 proxy server. NGINX is known for its high performance, stability, rich feature set, simple configuration, and low resource consumption.

(Taken from the official wiki.) We will use two Nginx containers as web servers serving simple HTML pages, and a third Nginx container as a load balancer that forwards requests to the two web servers, or only to those that are online.

Consul Template

This project provides a convenient way to populate values from Consul into the file system using the consul-template daemon. The daemon consul-template queries a Consul or Vault cluster and updates any number of specified templates on the file system. As an added bonus, it can optionally run arbitrary commands when the update process completes.

(From the README.) We will use Consul Template to render the static HTML pages served by the two Nginx web servers, and later the configuration of the Nginx load balancer.

Registrator

Registrator automatically registers and deregisters services for any Docker container by inspecting containers as they come online. Registrator supports pluggable service registries, which currently includes Consul, etcd and SkyDNS 2.

(From the documentation.) Registrator is made by GliderLabs, a group of developers creating cool and useful tools. It will take care of registering our webserver services as they come online, and deregistering them as they go offline, automatically.

The infrastructure we will be aiming for

Where to run this?

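We ran this on an AWS EC2 instance, and this blog post assumes you do the same.

We used a small instance, but this will run decently on a micro instance, which is in the free tier. We used Ubuntu 16.04 as the OS and kept the defaults for everything else. Make sure the instance's security group allows inbound TCP traffic on port 80 (the load balancer) and port 8500 (the Consul UI) from your IP, as we will open both in a browser.

This can also run natively on your laptop, but be aware that Docker for Mac, for example, has many networking limitations, and the command we use to start Consul reads the private IP from the AWS instance metadata endpoint, so you would need to adapt it. Using an AWS instance will give you the easiest experience.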

Before you start…

Install Docker on your AWS instance

The first step is to set up the Docker repository on your machine. You will then be able to install Docker.

sudo apt-get update

sudo apt-get -y install \
  apt-transport-https \
  ca-certificates \
  curl \
  software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add - &>/dev/null

sudo add-apt-repository \
  "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

sudo apt-get update

sudo apt-get -y install docker-ce

Download the images that you will need later on

We will make use of the official Consul and Nginx images, and will use the registrator image from Gliderlabs, so let’s download them ahead of time.

sudo docker pull consul:1.0.0

sudo docker pull nginx:1.13.5

sudo docker pull gliderlabs/registrator

Download Consul Template

We will use Consul Template to render our templates using keys from Consul’s Key/Value store, so we download Consul Template and install it ahead of time too.

wget https://releases.hashicorp.com/consul-template/0.19.3/consul-template_0.19.3_linux_amd64.tgz -O /tmp/consul-template.tar.gz

sudo tar -xvzf /tmp/consul-template.tar.gz -C /usr/local/bin

With the prerequisites sorted, let's start.
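Before going further, a quick sanity check can save some head-scratching. This is a minimal sketch: check_prereqs is our own hypothetical helper, and it only verifies that the tools are on your PATH, not that the Docker daemon is actually running.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report any of the given commands missing from PATH.
# Returns 0 if all are found, 1 otherwise.
check_prereqs() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      printf 'missing: %s\n' "$cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_prereqs docker curl consul-template && echo "all good"
```

If Docker is installed, sudo docker run --rm hello-world is a quick way to confirm that the daemon works too.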

Start the Consul Server

sudo docker run -d \
  --name consul --net=host \
  -e 'CONSUL_LOCAL_CONFIG={"skip_leave_on_interrupt": true, "ui": true, "dns_config": { "allow_stale": false }}' \
  consul:1.0.0 agent -server -bind="$(curl -s http://169.254.169.254/latest/meta-data/local-ipv4)" -client=0.0.0.0 -bootstrap

This starts a new container running Consul directly on your instance's network, so its HTTP API and UI are available on port 8500 of the instance. The Consul server is bound to the private IP of your AWS instance, and its client interfaces accept requests from anywhere. A Consul cluster composed of a single server is bootstrapped.

In detail:

- docker run: run the Docker container
- -d: detached (daemon) mode
- --name consul: give our container a human-friendly name
- --net=host: use the host's network stack, which makes it easier for our containers to communicate with each other. Consul's ports (8500 for the HTTP API and UI, 8600 for DNS) are opened directly on the host, so no -p port mapping is needed; Docker discards published ports in host network mode anyway
- -e 'CONSUL_LOCAL_CONFIG=...': pass some configuration to Consul: skip the graceful leave on interrupt, enable the UI, and disable stale reads for DNS queries
- consul:1.0.0: use the official Consul image, version 1.0.0
- agent -server: the options passed to the image; here we run the agent in server mode
- -bind="$(curl -s http://169.254.169.254/latest/meta-data/local-ipv4)": bind the Consul server to the private IP of our instance, fetched from the AWS instance metadata endpoint, to make it easier for all our containers to talk to each other
- -client=0.0.0.0: bind the client interfaces (HTTP API, UI and DNS) to all interfaces, so they accept connections from anywhere
- -bootstrap: let this Consul server elect itself as leader, since it is the only server

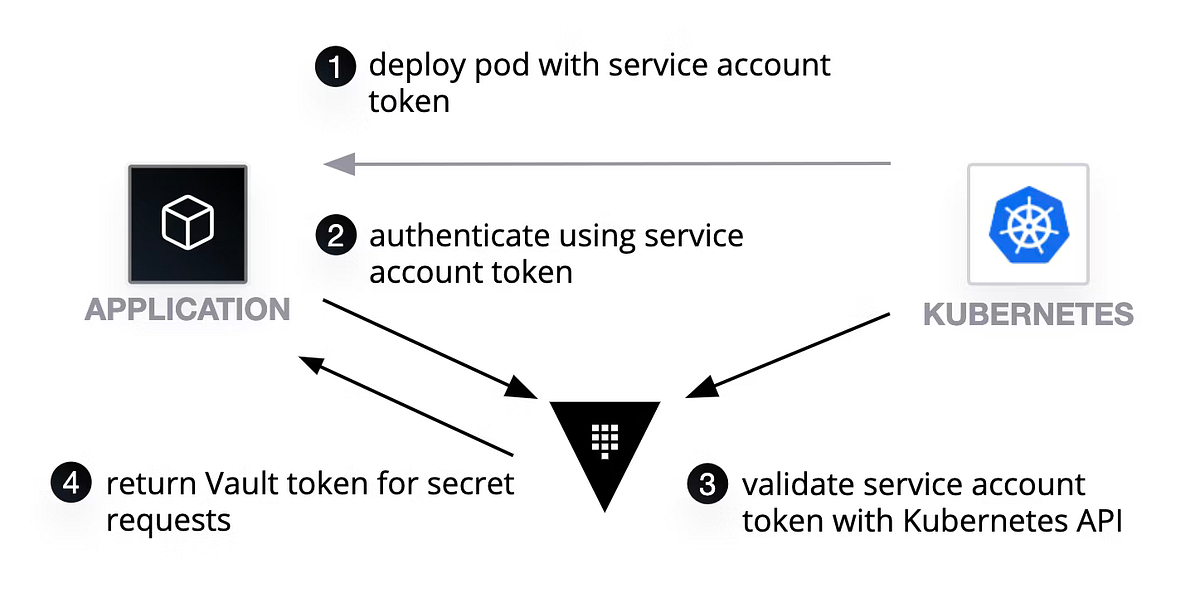
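Consul needs a moment to elect itself leader. Rather than guessing, you can poll the /v1/status/leader endpoint, which returns the leader's address as a JSON string, or an empty string while there is no leader. A sketch, where CONSUL_URL and wait_for_leader are our own hypothetical names:

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll Consul until it reports a leader.
# Argument: maximum number of attempts (one per second), default 30.
wait_for_leader() {
  local tries="${1:-30}" i leader
  for ((i = 0; i < tries; i++)); do
    # Returns e.g. "172.31.5.10:8300" (quoted), or "" when there is no leader;
    # an unreachable agent yields nothing at all, so we simply retry.
    leader="$(curl -s "${CONSUL_URL:-http://localhost:8500}/v1/status/leader")"
    if [ -n "$leader" ] && [ "$leader" != '""' ]; then
      printf 'leader: %s\n' "$leader"
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage: wait_for_leader 30 || echo "Consul has no leader yet"
```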

Start Registrator

sudo docker run -d \
  --name=registrator \
  --net=host \
  --volume=/var/run/docker.sock:/tmp/docker.sock \
  gliderlabs/registrator \
  consul://localhost:8500

This will start a new container running Registrator, with access to the Docker API, and using Consul to register services.

Details:

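- --net=host: use the host's network, so Registrator can reach Consul on localhost:8500
- --volume=/var/run/docker.sock:/tmp/docker.sock: mount the Docker socket, which allows Registrator to access the Docker API and see containers start and stop
- gliderlabs/registrator: use the GliderLabs Registrator image
- consul://localhost:8500: the registry URL, in this case our Consul agent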

Generate your index pages

Create your templates first

echo "Server Nginx 1, Name: {{ keyOrDefault \"playground/server1\" \"server1 name missing\" }}" >> /tmp/index.html.1.template

echo "Server Nginx 2, Name: {{ keyOrDefault \"playground/server2\" \"server2 name missing\" }}" >> /tmp/index.html.2.template

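We create two simple page templates. In the first one, {{ keyOrDefault "playground/server1" "server1 name missing" }} will be replaced by consul-template with the value of the key playground/server1, or by "server1 name missing" if no key with this name exists. The second template does the same with playground/server2. (The backslashes in the echo commands only escape the quotes for the shell; they do not end up in the template files.)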
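To see what keyOrDefault does, here is a rough by-hand equivalent against the KV HTTP API. It is only a sketch: the key_or_default name and CONSUL_URL are our own, and unlike consul-template it reads the key once instead of watching it for changes.

```shell
#!/usr/bin/env bash
# Hypothetical by-hand equivalent of consul-template's keyOrDefault.
key_or_default() {
  local key="$1" default="$2" value
  # -f makes curl exit non-zero on HTTP errors, such as the 404 Consul
  # returns for a key that does not exist; ?raw skips the JSON/base64 wrapping.
  if value="$(curl -sf "${CONSUL_URL:-http://localhost:8500}/v1/kv/${key}?raw")"; then
    printf '%s\n' "$value"
  else
    printf '%s\n' "$default"
  fi
}

# Usage: key_or_default playground/server1 "server1 name missing"
```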

Render the templates using Consul Template

nohup consul-template \
  -template "/tmp/index.html.1.template:/tmp/index.html.1:/bin/bash -c 'sudo docker restart nginx || true'" \
  -template "/tmp/index.html.2.template:/tmp/index.html.2:/bin/bash -c 'sudo docker restart nginx2 || true'" &

We run consul-template in the background using nohup and pass our two templates. For each template that we want rendered, we pass three things:

- the input, our template

- the output, the rendered html page

- optionally, a command to run every time the template is re-rendered, for example after one of its keys changes

Here we render our templates into /tmp/index.html.1 and /tmp/index.html.2 so they are easy to keep track of, and we ask for the containers that will serve them to be restarted whenever they are re-rendered. The restart makes sure the container picks up the new page: consul-template replaces the file rather than editing it in place, and a container that bind-mounts a single file keeps seeing the old one until it is restarted. We add || true because the containers are not running yet, so restarting them will fail for now, but we want consul-template to keep running nonetheless.
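While experimenting with a template, it can help to render it once to the terminal instead of writing the output file and restarting containers. consul-template's -once flag renders a single time and exits, and -dry prints the result to stdout instead of writing the destination. The preview_template wrapper below is our own hypothetical helper; it relies on consul-template's default agent address (127.0.0.1:8500).

```shell
#!/usr/bin/env bash
# Hypothetical helper: render a template once and print the result.
#   -dry   dump the rendered output to stdout instead of writing the destination
#   -once  render a single time and exit instead of running as a daemon
preview_template() {
  local src="$1" dest="${2:-/tmp/preview.out}"
  consul-template -template "${src}:${dest}" -once -dry
}

# Usage: preview_template /tmp/index.html.1.template
```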

Look at your rendered index pages

cat /tmp/index.html.1

cat /tmp/index.html.2

As you can see, the pages have been rendered by consul-template, but they are using the defaults that you provided in the templates. You will create the keys in a moment.

Start your Nginx web servers

sudo docker run -d -P --name=nginx -v /tmp/index.html.1:/usr/share/nginx/html/index.html -e "SERVICE_NAME=webserver" nginx:1.13.5

sudo docker run -d -P --name=nginx2 -v /tmp/index.html.2:/usr/share/nginx/html/index.html -e "SERVICE_NAME=webserver" nginx:1.13.5

Here we create two containers running nginx, and we give them a service name “webserver” for Registrator.

Details:

- -P: publish all ports exposed by the container on random host ports, letting Docker choose them
- -v /tmp/index.html.1:/usr/share/nginx/html/index.html: mount the /tmp/index.html.1 file from our host as /usr/share/nginx/html/index.html in the container, which is the page served by default at the root of the web server
- -e "SERVICE_NAME=webserver": let Registrator know that this container runs the webserver service, so that it automatically registers it with Consul
- nginx:1.13.5: use the official nginx image, version 1.13.5
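As written, Consul only learns that a web server is gone when Registrator deregisters its stopped container; a container that is running but broken stays registered. Registrator's Consul backend can also register an HTTP health check from the SERVICE_CHECK_HTTP and SERVICE_CHECK_INTERVAL environment variables, and consul-template's service function only returns healthy instances by default. A sketch, where run_webserver is our own hypothetical wrapper and the / path and 10s interval are assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: start an nginx web server like the commands above,
# plus an HTTP health check that Registrator registers with Consul.
run_webserver() {
  local name="$1" page="$2"
  sudo docker run -d -P --name="$name" \
    -v "${page}:/usr/share/nginx/html/index.html" \
    -e "SERVICE_NAME=webserver" \
    -e "SERVICE_CHECK_HTTP=/" \
    -e "SERVICE_CHECK_INTERVAL=10s" \
    nginx:1.13.5
}

# Usage: run_webserver nginx /tmp/index.html.1
```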

Query your new nginx servers

Get the ports on which they are accessible:

sudo docker ps -f name=nginx

This lists your two nginx containers and their port mappings. In our case, nginx and nginx2 were running on host ports 32768 and 32769 respectively; yours may differ.

You should now be able to query the servers on their respective ports, and see their content:

curl localhost:32768
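Instead of reading the ports off docker ps, you can ask Docker directly with docker port, which prints the host address and port mapped to a container port. The host_port helper below is a hypothetical convenience around it; it keeps only the port of the first mapping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the host port mapped to a container's port 80.
host_port() {
  local mapping
  # docker port prints lines such as "0.0.0.0:32768"; keep the first one
  mapping="$(sudo docker port "$1" 80 | head -n 1)"
  # strip everything up to the last colon, leaving only the port
  printf '%s\n' "${mapping##*:}"
}

# Usage: curl "localhost:$(host_port nginx)"
```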

Create the keys/values in Consul

Consul ships with a UI which we activated when we created the Consul server, so let’s use it to create the keys that our templates are looking for.

1. Go to http://<public IP>:8500/ui/, with <public IP> being the public IP of your instance.
2. In the top menu, click on the KEY/VALUE menu item.
3. In the Create Key box, add the playground/server1 key and set its value to the name you want to give to your first server.
4. Click the Create button.
5. Repeat steps 2 to 4 for the key playground/server2.

Consul UI with the two keys created
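If you prefer the command line to the UI, the consul binary inside the container can write the same keys with consul kv put. A sketch; set_server_name is our own hypothetical wrapper and the names are examples:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: write playground/<server> through the consul CLI
# running inside the "consul" container started earlier.
set_server_name() {
  sudo docker exec consul consul kv put "playground/$1" "$2"
}

# Usage:
#   set_server_name server1 "Alpha"
#   set_server_name server2 "Bravo"
```

The HTTP API works too: curl -X PUT --data 'Alpha' http://localhost:8500/v1/kv/playground/server1 sets the same key.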

Query the servers again

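The ports will now have changed: consul-template re-rendered both pages when you created the keys and restarted the containers, and Docker assigned them new host ports. Run sudo docker ps -f name=nginx again to see which ports are in use now.

Then run curl against the new port (curl localhost:32770 in our case) and you should see your keys working! You can change the values of the keys and see the result change within a few seconds; just remember that each change restarts the container, which may give it a new port again.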

Setup the Nginx Load Balancer

Create the nginx.conf template

cat <<EOT > /tmp/nginx.conf.template
upstream app {
    {{range service "webserver"}}server {{.Address}}:{{.Port}} max_fails=3 fail_timeout=60 weight=1;
    {{else}}server 127.0.0.1:65535; # force a 502{{end}}
}

server {
    listen 80 default_server;
    resolver 172.16.0.23;
    set \$upstream_endpoint http://app;

    location / {
        proxy_pass \$upstream_endpoint;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header Host \$host;
        proxy_set_header X-Real-IP \$remote_addr;
    }
}
EOT

We create a new template that loops over all our services called "webserver", adds them to the list of servers in the upstream of our load balancer, and then sends the traffic to this upstream.

Here is what happens in detail:

- cat <<EOT > /tmp/nginx.conf.template: write everything between this line and the closing EOT to the file /tmp/nginx.conf.template, overwriting it if it already exists
- upstream app { ... }: define the group of servers that can handle requests
- {{range service "webserver"}}server {{.Address}}:{{.Port}} max_fails=3 fail_timeout=60 weight=1;: loop over all the healthy instances of the webserver service, effectively our two nginx web servers, and add a server line with the address and port of each
- {{else}}server 127.0.0.1:65535; # force a 502{{end}}: if there is no webserver service, the list would be empty, which nginx rejects; this little trick points the upstream at a closed port so that users get a 502 instead
- server { ... }: configure our virtual server
- listen 80 default_server;: listen on port 80
- resolver 172.16.0.23;: use the DNS server of our AWS VPC (yours will likely differ; the Amazon-provided DNS is also reachable at 169.254.169.253). Because proxy_pass uses a variable, nginx first looks the name up among the defined upstream groups and only falls back to this resolver if it is not found
- set \$upstream_endpoint http://app;: the $ is escaped so that $upstream_endpoint isn't interpreted by bash; this tells nginx that the upstream endpoint is http://app, app being the upstream group defined above
- location / { ... }: proxy every request (location / matches all paths) to the upstream, passing along the client's address and the original Host header
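To picture what consul-template writes into the upstream block, here is a plain-bash imitation of the range/else logic. It is only an illustration (render_upstream is hypothetical and the addresses are made up); the real rendering is done by consul-template from live Consul data.

```shell
#!/usr/bin/env bash
# Illustration only: mimic the {{range service "webserver"}}...{{else}}...{{end}}
# section of the template for a given list of address:port backends.
render_upstream() {
  local backend
  printf 'upstream app {\n'
  if [ "$#" -eq 0 ]; then
    # no healthy "webserver" instance: point at a closed port to force a 502
    printf 'server 127.0.0.1:65535; # force a 502\n'
  else
    for backend in "$@"; do
      printf 'server %s max_fails=3 fail_timeout=60 weight=1;\n' "$backend"
    done
  fi
  printf '}\n'
}

# Usage: render_upstream 172.31.5.10:32768 172.31.5.10:32769
```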

Render the nginx.conf file

nohup consul-template -template "/tmp/nginx.conf.template:/tmp/nginx.conf:/bin/bash -c 'sudo docker restart nginx-lb || true'" &

We run consul-template again, this time with the nginx.conf template as input and /tmp/nginx.conf as output. Every time the output changes, the nginx-lb container, which will run the Nginx load balancer, is restarted so that it uses the new configuration.

Output the content of nginx.conf

cat /tmp/nginx.conf

You can see the content of nginx.conf and see that it was rendered with the two currently running webservers in the upstream servers.

Test the template

sudo docker stop nginx2

For fun, stop the nginx2 container, then check that the configuration file has changed.

cat /tmp/nginx.conf

You can then start the container again

sudo docker start nginx2

And see the content of nginx.conf once again

cat /tmp/nginx.conf
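Rather than eyeballing the file each time, you can count the upstream servers consul-template wrote. A small sketch, assuming the max_fails=3 marker from our template (count_backends is a hypothetical name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: count backend lines rendered into an nginx.conf.
# Relies on every real backend line carrying "max_fails=3", as in our template;
# the "force a 502" fallback line does not, so it counts as zero.
count_backends() {
  local conf="${1:-/tmp/nginx.conf}"
  # grep -c prints 0 but exits 1 when nothing matches; ignore that status
  grep -c 'max_fails=3' "$conf" || true
}

# Usage (give consul-template a moment to re-render after the stop):
#   sudo docker stop nginx2; sleep 2; count_backends
```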

Start the load balancer

sudo docker run -p 80:80 --name nginx-lb \
  -v /tmp/nginx.conf:/etc/nginx/conf.d/default.conf \
  -e "SERVICE_TAGS=loadbalancer" -d \
  nginx:1.13.5

You can now open your instance's public IP in your browser (port 80 must be open in its security group) and see your page. Here are a few things that you can try:

- Refresh several times: you will see the two pages alternate
- Change your key values in the Consul UI and notice how the text changes in your browser
- Shut down nginx or nginx2 (or even both!) and see what happens when you refresh your browser (hint: sudo docker stop nginx, sudo docker start nginx...)
- Change your index templates and notice what happens. Can you figure out why?
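To watch the round-robin balancing without a browser (browsers may also request extra resources such as /favicon.ico, which can make the alternation look irregular), you can fire a few requests and tally the answers. A sketch; hit_lb is hypothetical and the default URL assumes you run it on the instance itself:

```shell
#!/usr/bin/env bash
# Hypothetical helper: send N requests to the load balancer and count
# how many times each distinct response came back.
hit_lb() {
  local n="${1:-10}" url="${2:-http://localhost/}" i
  # Each index page is a single line (created with echo), so counting
  # identical lines counts how often each backend answered.
  for ((i = 0; i < n; i++)); do
    curl -s "$url"
  done | sort | uniq -c
}

# Usage: hit_lb 10
```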

Author’s notes

This is a showcase of what can be done with Consul, not a production use case. I encourage you to read through the documentation and other use cases, as Consul can do much more.